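As AI technology advances, large models are finding their way into nearly every industry. In this post I want to share some lessons from building a chat application, backed by a large model, for 曲靖M's CMS. For this project we chose DeepSeek-R1 as the model and built the front end with Vue 2.0 and the Element UI component library.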

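Project background

Before getting into the details, here is some background on the project and its goals. 曲靖M is a leading provider of digital content management systems and wanted to bring in current AI technology to improve the user experience. Our task was therefore to integrate the DeepSeek-R1 model and build an intelligent chatbot that offers more personalized service and support.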

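Technology choices

- AI engine (back end): deepseek-r1-distill-qwen-32b

- Front-end framework: Vue.js 2.0

- UI library: Element UI

We chose these for their respective strengths: DeepSeek provides strong natural language processing; Vue 2.0 is widely used because it is easy to learn and flexible; and Element UI offers a polished, consistent set of components that sped up development considerably.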

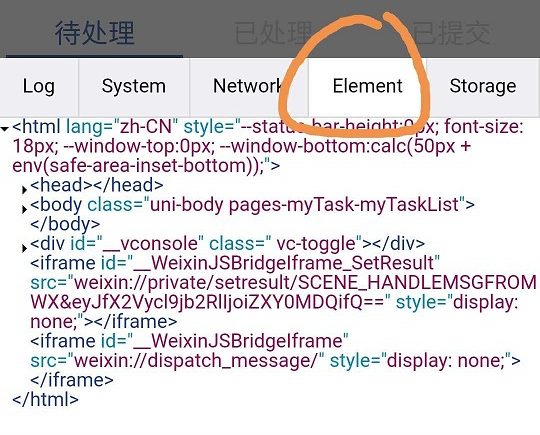
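One practical consequence of streaming from a DeepSeek-R1 model, which the component later in this post relies on, is that each chunk can carry `reasoning_content` (the chain of thought) separately from `content` (the answer), followed by a final usage-only chunk when `stream_options.include_usage` is set. As a rough sketch of how those pieces fold into one reply, assuming the chunk shape the component reads, the accumulation could be written as a pure function. The names `initialState` and `applyChunk` are hypothetical and do not appear in the component.

```javascript
// Hypothetical helpers for illustration; not part of the original component.
function initialState() {
  return { role: '', chainOfThought: '', content: '', status: 'thinking', totalTokens: 0 }
}

// Folds one parsed stream chunk into the running reply and returns a new state.
function applyChunk(state, chunk) {
  const choice = chunk.choices && chunk.choices[0]
  if (!choice) {
    // Assumed usage-only chunk: no choices, just token counts.
    const usage = chunk.usage || {}
    return { ...state, status: 'stop', totalTokens: usage.total_tokens || 0 }
  }
  const delta = choice.delta || {}
  const content = state.content + (delta.content || '')
  let status = content ? 'answering' : 'thinking'
  if (choice.finish_reason === 'stop') status = 'stop'
  return {
    ...state,
    role: delta.role || state.role,
    chainOfThought: state.chainOfThought + (delta.reasoning_content || ''),
    content,
    status
  }
}

// Example: replaying a few hand-written chunks.
const sampleChunks = [
  { choices: [{ delta: { role: 'assistant', content: '' } }] },
  { choices: [{ delta: { reasoning_content: 'Let me think. ' } }] },
  { choices: [{ delta: { content: 'Hello' } }] },
  { choices: [{ delta: { content: '!' }, finish_reason: 'stop' }] },
  { choices: [], usage: { total_tokens: 12 } }
]
const finalReply = sampleChunks.reduce((s, c) => applyChunk(s, c), initialState())
console.log(finalReply.content, finalReply.totalTokens)
```

Keeping this step free of `this` and of `$set` makes it easy to test without mounting a component; the component can then assign the returned object to its reactive state in one place.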

Implementation
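The component below reads the response body as a stream and cuts it into `data:` lines before parsing each one as JSON. Because a network chunk can end in the middle of a line, the unfinished tail has to be carried over to the next read. A minimal sketch of that buffering step in isolation might look like the following; `createSseParser` and its `push` and `isFinished` methods are hypothetical names, not part of the component.

```javascript
// Hypothetical helper for illustration; not part of the original component.
// Accepts decoded text chunks and returns the JSON payloads of every
// complete `data:` line received so far.
function createSseParser() {
  let buffer = ''
  let finished = false
  return {
    push(text) {
      const payloads = []
      buffer += text
      const lines = buffer.split('\n')
      buffer = lines.pop() // the last piece may be an incomplete line
      for (const raw of lines) {
        const line = raw.trim() // also drops a trailing '\r'
        if (!line.startsWith('data:')) continue // skip blank lines and other fields
        const data = line.slice('data:'.length).trim()
        if (data === '[DONE]') {
          finished = true
          break
        }
        try {
          payloads.push(JSON.parse(data))
        } catch (e) {
          // Malformed payload: skip it rather than abort the whole stream.
        }
      }
      return payloads
    },
    isFinished() {
      return finished
    }
  }
}

// Example: a JSON object split across two network chunks.
const parser = createSseParser()
const firstBatch = parser.push('data: {"id":"1","x"')
const secondBatch = parser.push(':1}\n\ndata: {"id":"2"}\r\ndata: [DONE]\n')
console.log(firstBatch.length, secondBatch.length, parser.isFinished())
```

In the component, each string passed to `push` would be the output of `decoder.decode(value, { stream: true })`, and each returned payload would go to `handleParsedMessage`.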

<template>

<div class="chat-container">

<div id="scrollArea" class="response-area">

<div class="response-prompt">

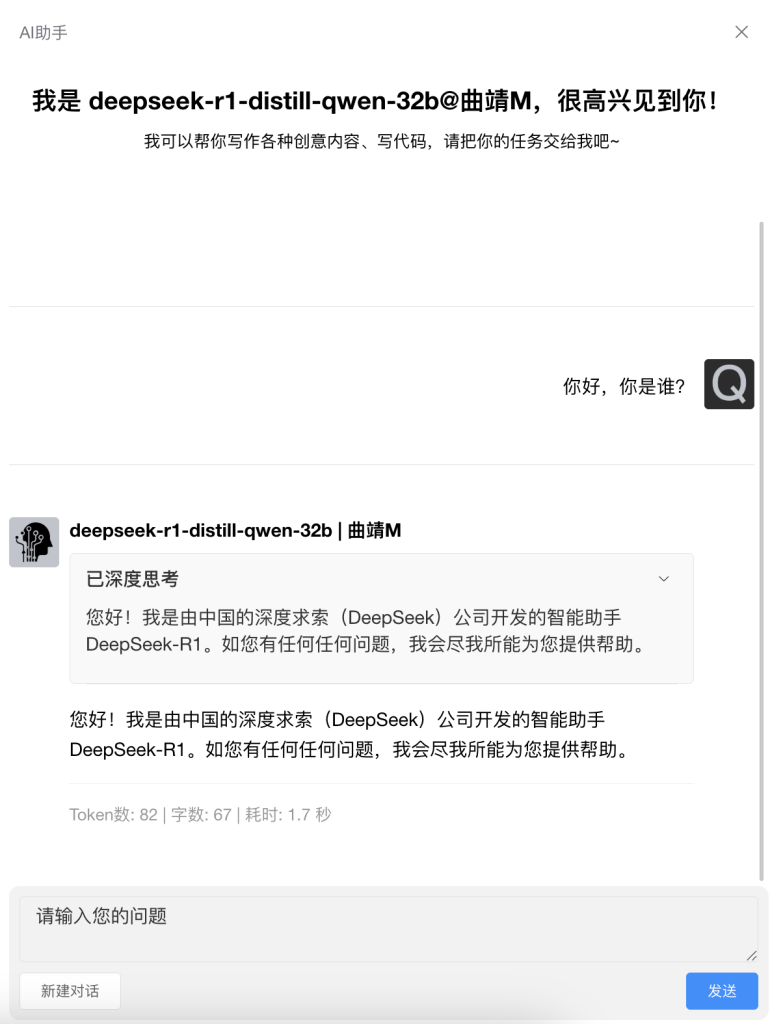

<h1>I'm {{ model }}@曲靖M, nice to meet you!</h1>

<div class="response-prompt__des">I can help you write all kinds of creative content and code. Hand your task over to me!</div>

</div>

<!-- Show each reply in a card, along with its token count, character count, elapsed time and chain of thought -->

<div v-for="(item, key) in responseDetails" :key="key" class="response-card">

<div v-if="item.role === 'user'" class="response-card-question">

<div class="response-card-question__content">

<div class="response-card-question__content-wrap">

<div class="response-card-question__content-wrap__text" v-text="item.content" />

</div>

</div>

<div class="response-card-question__avatar">

<el-avatar shape="square" :size="48" src="https://img.qujingm.com/1/1/q_icon.png" class="response-card-question__avatar-img" />

</div>

</div>

<div v-else class="response-card-answer">

<div class="response-card-answer__avatar">

<el-avatar shape="square" :size="48" src="https://img.qujingm.com/1/1/ai_icon.png" class="response-card-answer__avatar-img" />

</div>

<div class="response-card-answer__main">

<div class="response-card-answer__main-meta">

<div class="response-card-answer__main-meta__modelname">{{ model }} | 曲靖M</div>

</div>

<el-collapse v-model="item.showCollapseItem" accordion class="response-card-answer__main-think">

<el-collapse-item name="thinking">

<template slot="title">

<div class="response-card-answer__main-think__status">

<div class="response-card-answer__main-think__status-info">

<span v-if="item.status === 'thinking'">Thinking</span>

<span v-else>Finished thinking</span>

</div>

<loading-loop v-if="item.status === 'thinking'" class="response-card-answer__main-think__status-progress" />

</div>

</template>

<div class="response-card-answer__main-think__content" v-text="item.chainOfThought" />

</el-collapse-item>

</el-collapse>

<vue-markdown :source="item.content" class="response-card-answer__main-content" />

<div class="response-card-answer__main-info">

Tokens: {{ item.tokenCount }} | Characters: {{ item.wordCount }} | Time: {{ item.duration }} s

</div>

</div>

</div>

</div>

</div>

<div ref="questionArea" class="question-area">

<div class="question-area-box">

<el-input

v-model="question"

type="textarea"

:autosize="{ minRows: 2, maxRows: 10 }"

placeholder="Type your question"

class="question-area__input"

@keyup.enter.native="askQuestion"

/>

<div class="question-area__tools">

<div class="question-area__tools-box">

<div>

<el-button style="margin-top: 10px;" @click="refreshChat">New chat</el-button>

</div>

<el-button type="primary" :loading="answering" style="margin-top: 10px;" @click="askQuestion">Send</el-button>

</div>

</div>

</div>

</div>

</div>

</template>

<script>

import VueMarkdown from 'vue-markdown'

import LoadingLoop from '@/components/LoadingLoop'

// const systemPrompt = { role: 'system', content: 'You are a professional article-writing assistant' }

export default {

name: 'AiChat',

components: { VueMarkdown, LoadingLoop },

data() {

return {

model: 'deepseek-r1-distill-qwen-32b',

question: '',

conversationHistory: [],

startTime: 0,

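responseDetails: {}, // details of each reply: content, token count, character count, elapsed time, chain of thought and status

currentReplyIndex: '', // key of the reply currently being built

currentChainOfThought: '', // chain-of-thought text accumulated so far

currentFormalContent: '', // answer text accumulated so far

currentReplayRole: '', // role of the current reply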

answering: false

}

},

watch: {

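// questionContent is not defined in this listing; it is assumed to be provided elsewhere, for example mapped from the Vuex store
questionContent: {

handler(val) {

if (val && val.trim()) {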

this.question = val

this.askQuestion()

}

},

immediate: true

}

},

// created() {

// this.conversationHistory.push(systemPrompt)

// },

methods: {

askQuestion() {

if (this.answering) {

this.$notify({

title: 'Notice',

message: 'A reply is in progress, please wait'

})

return

}

this.$store.dispatch('app/updateQuestionContent', '')

if (this.question.trim()) {

this.answering = true // set the busy flag only when there is a question to send, so an empty input cannot lock the form

this.conversationHistory.push({ role: 'user', content: this.question })

this.startTime = performance.now() // record the start time

this.$set(this.responseDetails, this.startTime + '', {

role: 'user',

content: this.question

})

this.getResponse(this.question)

this.question = ''

} else {

this.$message({

message: 'Please enter your question',

type: 'warning'

})

}

},

async getResponse() {

try {

const response = await fetch('https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions', {

method: 'POST',

headers: {

'Content-Type': 'application/json',

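// Add whatever request headers or credentials your setup needs.
// Note: a key placed in browser code is visible to every user; consider proxying the request through your own server.

'Authorization': 'Bearer Your API Key'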

},

body: JSON.stringify({

model: this.model,

messages: this.conversationHistory,

stream: true,

stream_options: {

include_usage: true

}

})

})

if (!response.ok) {

throw new Error('Request failed with status ' + response.status)

}

const reader = response.body.getReader()

const decoder = new TextDecoder('utf-8')

let partialMessage = ''

let done = false

while (!done) {

const { value, done: doneReading } = await reader.read()

done = doneReading

const chunkValue = decoder.decode(value, { stream: true })

partialMessage += chunkValue

// Split into lines; each complete line may hold one JSON object

const lines = partialMessage.split('\n')

partialMessage = lines.pop() || '' // the last line may be incomplete

for (let line of lines) {

if (line.trim()) {

// Check for the end-of-stream marker

if (line.trim() === 'data: [DONE]') {

done = true

break

}

// Strip the "data: " prefix

if (line.startsWith('data: ')) {

line = line.slice('data: '.length)

}

try {

const parsedMsg = JSON.parse(line)

this.currentReplyIndex = parsedMsg.id || '1'

this.handleParsedMessage(parsedMsg)

const scrollArea = document.getElementById('scrollArea')

scrollArea.scrollTo({

top: scrollArea.scrollHeight,

behavior: 'smooth'

})

} catch (parseError) {

console.error('Failed to parse a message', parseError, 'on line:', line)

}

}

}

}

this.conversationHistory.push({ role: this.currentReplayRole, content: this.currentFormalContent })

} catch (error) {

console.error('Error fetching the response', error)

} finally {

this.currentReplyIndex = ''

this.currentChainOfThought = ''

this.currentFormalContent = ''

this.currentReplayRole = ''

this.answering = false

}

},

handleParsedMessage(parsedMsg) {

if (parsedMsg.choices && parsedMsg.choices.length > 0) {

if (parsedMsg.choices[0].finish_reason === 'stop') {

this.$set(this.responseDetails, this.currentReplyIndex, {

role: this.currentReplayRole, // keep the role so the finished card does not lose it

status: 'stop',

showCollapseItem: '',

chainOfThought: this.currentChainOfThought,

content: this.currentFormalContent,

promptTokenCount: 0,

completionTokenCount: 0,

tokenCount: 0,

wordCount: 0,

duration: ((Math.floor(performance.now() - this.startTime)) / 1000).toFixed(1)

})

} else if (parsedMsg.choices[0].delta) {

const delta = parsedMsg.choices[0].delta

const reasoningContent = delta.reasoning_content || ''

const formalContent = delta.content || ''

const role = delta.role || ''

if (role.length > 0) {

this.currentReplayRole = role

this.$set(this.responseDetails, this.currentReplyIndex, {

role: this.currentReplayRole,

status: 'thinking',

showCollapseItem: 'thinking',

chainOfThought: this.currentChainOfThought,

content: this.currentFormalContent,

promptTokenCount: 0,

completionTokenCount: 0,

tokenCount: 0,

wordCount: 0,

duration: ((Math.floor(performance.now() - this.startTime)) / 1000).toFixed(1)

})

}

if (reasoningContent.length > 0) {

this.currentChainOfThought += reasoningContent

this.$set(this.responseDetails, this.currentReplyIndex, {

role: this.currentReplayRole,

status: 'thinking',

showCollapseItem: 'thinking',

chainOfThought: this.currentChainOfThought,

content: this.currentFormalContent,

promptTokenCount: 0,

completionTokenCount: 0,

tokenCount: 0,

wordCount: 0,

duration: ((Math.floor(performance.now() - this.startTime)) / 1000).toFixed(1)

})

} else if (formalContent.length > 0) {

this.currentFormalContent += formalContent

this.$set(this.responseDetails, this.currentReplyIndex, {

role: this.currentReplayRole,

status: 'reasoning',

showCollapseItem: '',

chainOfThought: this.currentChainOfThought,

content: this.currentFormalContent,

promptTokenCount: 0,

completionTokenCount: 0,

tokenCount: 0,

wordCount: 0,

duration: ((Math.floor(performance.now() - this.startTime)) / 1000).toFixed(1)

})

}

}

} else {

const usage = parsedMsg.usage || {} // guard against chunks that carry neither choices nor usage

const promptTokens = usage.prompt_tokens || 0

const completionTokens = usage.completion_tokens || 0

const totalTokens = usage.total_tokens || 0

this.$set(this.responseDetails, this.currentReplyIndex, {

role: this.currentReplayRole,

status: 'stop',

showCollapseItem: '',

chainOfThought: this.currentChainOfThought,

content: this.currentFormalContent,

promptTokenCount: promptTokens,

completionTokenCount: completionTokens,

tokenCount: totalTokens,

wordCount: this.currentFormalContent.length,

duration: ((Math.floor(performance.now() - this.startTime)) / 1000).toFixed(1)

})

}

},

refreshChat() {

this.$emit('refresh')

}

}

}

</script>

<style lang="scss" scoped>

.chat-container {

width: 100%;

height: calc(100vh - 76px);

background-color: #ffffff;

padding: 0 10px;

display: flex;

flex-direction: column;

justify-content: space-between;

}

.response-area {

overflow-y: scroll;

}

.response-prompt {

padding: 150px 0 150px 0;

h1 {

font-size: 24px;

text-align: center;

}

&__des {

text-align: center;

}

}

.response-card {

max-width: 750px;

padding: 50px 0;

margin: 0 auto;

border-top: 1px solid #dddddd;

&-question {

display: flex;

flex-direction: row;

justify-content: flex-end;

&__content {

min-height: 48px;

display: flex;

flex-direction: column;

justify-content: center;

&-wrap {

text-align: right;

padding-left: 58px;

&__text {

display: inline-block;

text-align: left;

font-size: 18px;

line-height: 26px;

white-space: pre-wrap;

}

}

}

&__avatar {

&-img {

margin-left: 10px;

}

}

}

&-answer {

margin-bottom: 10px;

display: flex;

flex-direction: row;

&__avatar {

&-img {

margin-right: 10px;

padding: 5px;

}

}

&__main {

flex: 1;

padding-right: 58px;

&-meta {

display: flex;

flex-direction: column;

line-height: 24px;

&__modelname {

font-size: 18px;

font-weight: bolder;

}

&__time {

color: #999999;

}

}

&-think {

margin: 10px 0 20px 0;

border: 1px solid #e2e2e2;

border-radius: 5px;

padding: 0 15px;

background-color: #f9f9f9;

::v-deep .el-collapse-item__header {

background-color: transparent;

border: 0;

}

::v-deep .el-collapse-item__wrap {

background-color: transparent;

}

&__status {

display: flex;

flex-direction: row;

align-items: center;

&-info {

font-size: 18px;

}

&-progress {

width: 200px;

margin-left: 20px;

}

}

&__content {

font-size: 18px;

line-height: 26px;

white-space: pre-wrap;

}

}

&-content {

line-height: 160%;

font-size: 18px;

}

&-info {

border-top: 1px dotted #e2e2e2;

padding-top: 20px;

color: #999999;

}

}

}

}

.question-area {

margin-bottom: 10px;

&-box {

display: flex;

flex-direction: column;

background-color: #f2f2f2;

padding: 10px;

border-radius: 10px;

max-width: 750px;

margin: 0 auto;

}

&__input {

::v-deep .el-textarea__inner {

font-size: 18px;

line-height: 26px;

background-color: #f2f2f2;

color: #000000;

&::placeholder {

color: #333333;

font-size: 18px;

}

}

}

&__tools {

&-box {

display: flex;

flex-direction: row;

justify-content: space-between;

}

}

}

</style>

Conclusion

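Building this gave me a much better understanding of what DeepSeek can do, and showed how efficient development can be when Vue.js and Element UI are combined. I hope this post gives you some ideas and proves useful. If you have questions or suggestions, feel free to leave a comment.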
